Partners


NEWS

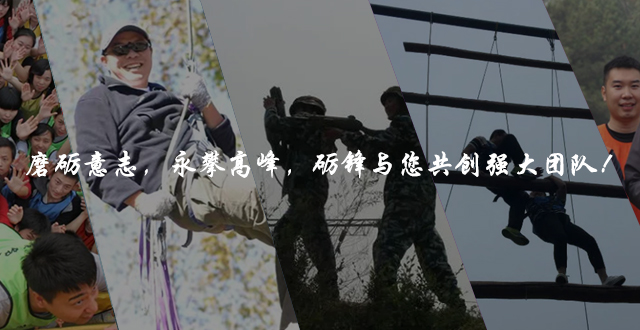

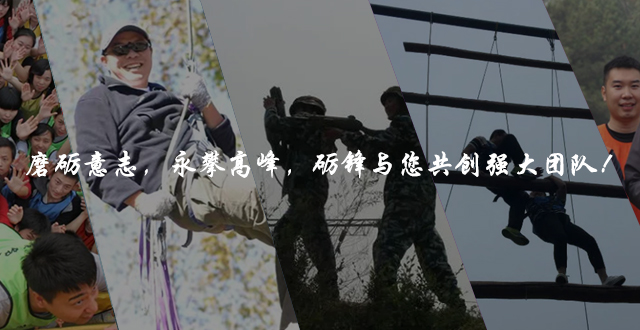

News Center

2022-10-09 What Is the Real Value of Outdoor Development Training?

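Outdoor development training strengthens team spirit. Its activities are rich and engaging and carry deep meaning, and it uses hands-on experience and reflection as its teaching...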

2022-09-23 The Significance of Orienteering Team-Building Training for Different Groups


I. Companies: Orienteering development training is a program that helps build outstanding teams. 1. First, orienteering development...

2022-09-14 The Value of Development Training Is Immeasurable!


Today's society needs not only people with skills and knowledge but, even more, people with strong psychological resilience. Company HR or...

2022-09-06 How to Choose and Evaluate a Professional Development Training Provider?


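1. Does the provider hold certification from an authoritative national body? Does the scope of its business license include development training? Can it issue...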

2022-09-01 What Is the Significance of Team Competition and Wilderness Development Training?


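Team competition training leaves a deep impression on participants, and their differing perspectives complement one another. This success comes from firefighting...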

2022-08-25 What Is the Significance of Wilderness Development Training?


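Team competition training leaves a deep impression on participants, and their differing perspectives complement one another. This success comes from firefighting...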

13833193977